In today’s digital landscape, data is at the core of every organization’s operations. As businesses increasingly rely on cloud computing, choosing the right storage solution has become crucial for seamless data management, scalability, and cost-effectiveness. Among the leading cloud service providers, Amazon Web Services (AWS) offers a comprehensive suite of storage services that accommodate a wide range of workloads and use cases. To optimize your storage infrastructure and maximize the benefits of the cloud, it is essential that you:

- Understand the differences between the various AWS storage types; and

- Know which storage type best suits your specific workload.

This article explores the AWS storage options available, covering their features, benefits, and use cases, to help you make informed decisions and select the most suitable storage solution for your workload.

Let’s start with the storage types and their functionality – you can use this information to determine your requirements.

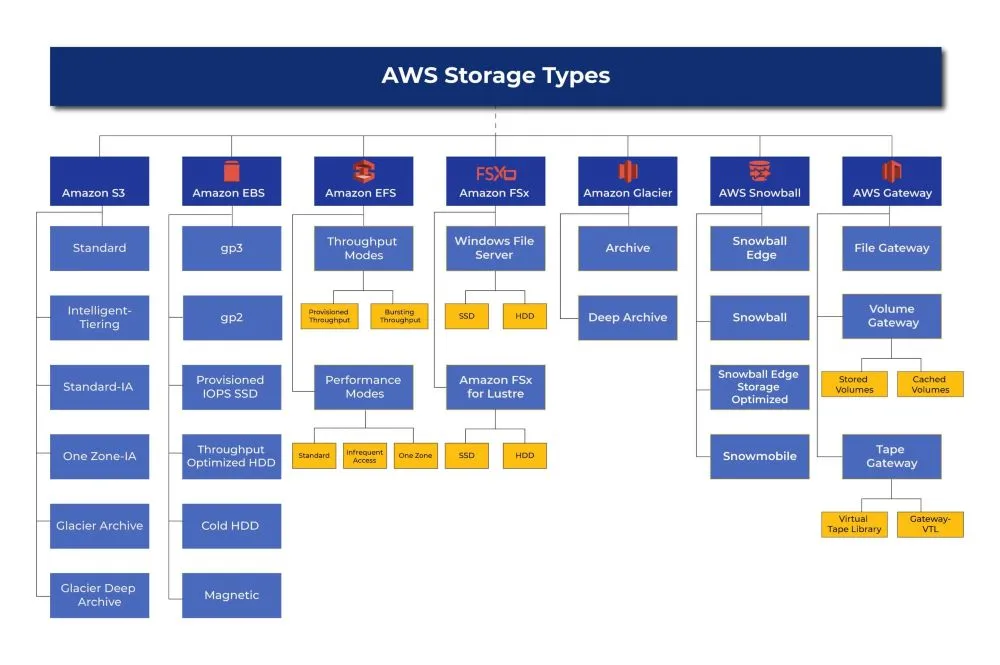

AWS Storage Types


Amazon Simple Storage Service (S3)

Amazon Simple Storage Service (S3) is one of the most widely used and versatile storage services offered by AWS. It provides object storage designed to store and retrieve any amount of data from anywhere on the web.

S3 is highly scalable, durable, and secure, making it suitable for a broad range of use cases.

- Standard: The Standard storage class is the default option and offers high durability, availability, and performance. It is designed for frequently accessed data and provides low-latency retrieval.

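- Intelligent-Tiering: The Intelligent-Tiering storage class automatically moves objects between access tiers as their access patterns change. Objects that you haven’t accessed for 30 consecutive days move to a lower-cost Infrequent Access tier, and objects not accessed for 90 days move to an Archive Instant Access tier, while frequently accessed data stays in the Frequent Access tier. An object that is accessed again returns to the Frequent Access tier. In exchange, S3 charges a small monthly monitoring and automation fee per object.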

- Standard-IA (Infrequent Access): The Standard-IA storage class is suitable for data that is accessed less frequently but still requires low-latency retrieval when needed. It offers lower storage costs than the Standard class but charges higher per-request costs plus a per-GB retrieval fee.

- One Zone-IA (One Zone Infrequent Access): The One Zone-IA storage class is similar to Standard-IA but stores data in a single Availability Zone instead of replicating it across multiple zones. This reduces costs, but the data is unavailable during an outage of that Availability Zone and can be lost if the zone is destroyed.

- Glacier (Archive): Glacier, now called S3 Glacier Flexible Retrieval, is a cost-effective storage class designed for long-term archival and backup. It provides high durability but longer retrieval times, ranging from minutes to hours. It suits data that you rarely access but must retain for the long term.

- Glacier Deep Archive: Glacier Deep Archive is the most cost-effective storage option for long-term data archiving. It offers the lowest storage costs but with the longest retrieval times, ranging from hours to days. It is ideal for data that is rarely accessed and can tolerate extended retrieval times.
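Each of these classes corresponds to a `StorageClass` value in the S3 API. The following minimal sketch assumes you upload objects with the AWS SDK for Python (boto3); it maps the class names used above to those API identifiers and assembles the keyword arguments for `put_object` without contacting AWS. The bucket and key names are hypothetical placeholders.

```python
# Hypothetical mapping from the class names used in this article to the
# StorageClass strings accepted by the Amazon S3 API.
S3_STORAGE_CLASSES = {
    "Standard": "STANDARD",
    "Intelligent-Tiering": "INTELLIGENT_TIERING",
    "Standard-IA": "STANDARD_IA",
    "One Zone-IA": "ONEZONE_IA",
    "Glacier": "GLACIER",  # S3 Glacier Flexible Retrieval
    "Glacier Deep Archive": "DEEP_ARCHIVE",
}


def put_object_kwargs(bucket: str, key: str, body: bytes, storage_class: str) -> dict:
    """Build keyword arguments for boto3's S3 client put_object() call.

    This function only assembles the request; it does not contact AWS.
    """
    try:
        api_class = S3_STORAGE_CLASSES[storage_class]
    except KeyError:
        raise ValueError(f"unknown storage class: {storage_class!r}") from None
    return {"Bucket": bucket, "Key": key, "Body": body, "StorageClass": api_class}


if __name__ == "__main__":
    # "example-bucket" and the object key are placeholder names.
    kwargs = put_object_kwargs("example-bucket", "reports/q1.csv", b"a,b\n1,2\n", "Standard-IA")
    print(kwargs["StorageClass"])  # STANDARD_IA
    # With boto3 installed and credentials configured:
    # import boto3
    # boto3.client("s3").put_object(**kwargs)
```

Choosing the class per upload suits data whose access pattern you already know; for data whose pattern changes over time, Intelligent-Tiering or a lifecycle rule avoids hard-coding the decision.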

Amazon S3 provides high durability: except for the One Zone classes, it automatically stores data redundantly across multiple devices in at least three Availability Zones within a Region. This redundancy protects against hardware failures and even the loss of a single zone. S3 also offers security features for encryption and fine-grained access control, including:

- Server-side encryption

- Access control policies

- Integration with AWS Identity and Access Management (IAM)
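As a hedged illustration of the first two features, the sketch below builds a bucket policy that denies any request not made over TLS, plus a default-encryption configuration. S3 already encrypts new objects with SSE-S3 by default; setting the rule explicitly, or switching to a KMS key, records that choice in your configuration. The function names and placeholder bucket are our own; the dictionaries follow the shapes that boto3's `put_bucket_policy` and `put_bucket_encryption` expect, as we understand them.

```python
import json
from typing import Optional


def deny_insecure_transport_policy(bucket: str) -> str:
    """Return a bucket policy (JSON string) that rejects any request not sent over TLS."""
    policy = {
        "Version": "2012-10-17",
        "Statement": [
            {
                "Sid": "DenyInsecureTransport",
                "Effect": "Deny",
                "Principal": "*",
                "Action": "s3:*",
                "Resource": [
                    f"arn:aws:s3:::{bucket}",    # the bucket itself
                    f"arn:aws:s3:::{bucket}/*",  # every object in it
                ],
                # aws:SecureTransport is "false" when the request used plain HTTP.
                "Condition": {"Bool": {"aws:SecureTransport": "false"}},
            }
        ],
    }
    return json.dumps(policy)


def default_encryption_config(kms_key_id: Optional[str] = None) -> dict:
    """Default server-side encryption: SSE-S3 (AES-256) unless a KMS key is given."""
    if kms_key_id is None:
        default = {"SSEAlgorithm": "AES256"}
    else:
        default = {"SSEAlgorithm": "aws:kms", "KMSMasterKeyID": kms_key_id}
    return {"Rules": [{"ApplyServerSideEncryptionByDefault": default}]}


if __name__ == "__main__":
    bucket = "example-bucket"  # placeholder
    print(deny_insecure_transport_policy(bucket))
    print(default_encryption_config())
    # With boto3:
    # s3 = boto3.client("s3")
    # s3.put_bucket_policy(Bucket=bucket, Policy=deny_insecure_transport_policy(bucket))
    # s3.put_bucket_encryption(
    #     Bucket=bucket, ServerSideEncryptionConfiguration=default_encryption_config())
```

An explicit Deny like this one overrides any Allow granted through IAM, so it acts as a bucket-wide guardrail regardless of who the caller is.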

S3 also supports storage management features that give you control over data retention, backup strategies, and compliance requirements. These features include:

- Versioning

- Lifecycle policies

- Event notifications

- Cross-region replication
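Versioning and cross-region replication work together: S3 only replicates from a bucket that has versioning enabled, and the destination bucket needs versioning too. The sketch below, which assumes boto3 and uses a hypothetical IAM role ARN and bucket names, builds both configurations. A new replication rule applies to objects written after it is in place.

```python
def versioning_config(enabled: bool = True) -> dict:
    """VersioningConfiguration for put_bucket_versioning().

    Once enabled, versioning can be suspended but not removed from a bucket.
    """
    return {"Status": "Enabled" if enabled else "Suspended"}


def replication_config(role_arn: str, destination_bucket: str, prefix: str = "") -> dict:
    """ReplicationConfiguration that copies new objects under `prefix` to another bucket.

    `role_arn` must name an IAM role that S3 can assume to replicate on your behalf.
    """
    return {
        "Role": role_arn,
        "Rules": [
            {
                "ID": "replicate-" + (prefix.strip("/") or "all"),
                "Status": "Enabled",
                "Priority": 1,
                "Filter": {"Prefix": prefix},  # an empty prefix matches every object
                "DeleteMarkerReplication": {"Status": "Disabled"},
                "Destination": {"Bucket": f"arn:aws:s3:::{destination_bucket}"},
            }
        ],
    }


if __name__ == "__main__":
    # Placeholder account ID, role, and bucket names.
    cfg = replication_config(
        "arn:aws:iam::111122223333:role/example-replication-role", "example-dr-bucket"
    )
    print(cfg["Rules"][0]["Destination"])
    # With boto3, after enabling versioning on both buckets:
    # s3.put_bucket_versioning(Bucket="example-bucket", VersioningConfiguration=versioning_config())
    # s3.put_bucket_replication(Bucket="example-bucket", ReplicationConfiguration=cfg)
```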

With its scalability, durability, and rich feature set, Amazon S3 is suitable for a wide range of use cases. These use cases include:

- Backup and restore

- Content distribution

- Data archiving

- Log storage and analysis

- Static website hosting

- Cloud-native application data storage
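For the static website hosting use case, a minimal sketch of the configuration you would pass to boto3's `put_bucket_website` looks like this. The helper name and document names are placeholder choices; the slash check reflects S3's rule that the index document suffix cannot contain a slash.

```python
def website_config(index_document: str = "index.html",
                   error_document: str = "error.html") -> dict:
    """WebsiteConfiguration for boto3's S3 client put_bucket_website()."""
    if not index_document or "/" in index_document:
        raise ValueError("the index document suffix must be non-empty and contain no slash")
    return {
        "IndexDocument": {"Suffix": index_document},  # served for requests ending in "/"
        "ErrorDocument": {"Key": error_document},     # returned for 4xx errors
    }


if __name__ == "__main__":
    print(website_config())
    # With boto3 (placeholder bucket):
    # boto3.client("s3").put_bucket_website(
    #     Bucket="example-site-bucket", WebsiteConfiguration=website_config())
```

Enabling website hosting does not by itself make objects public; you would still need a bucket policy that allows reads and compatible Block Public Access settings, or a CDN such as Amazon CloudFront in front of the bucket.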

S3’s versatility and reliability make it a fundamental building block for many AWS cloud-based solutions.


Amazon Elastic Block Store (EBS)

Amazon Elastic Block Store (EBS) provides block-level storage volumes that you can attach to Amazon EC2 instances. EBS volumes persist independently of the instance: data survives an instance stop, and a volume also survives termination unless its DeleteOnTermination flag is set (as it is by default for root volumes). EBS volumes are highly available and reliable, making them suitable for a wide range of workloads, including databases, enterprise applications, and file systems.

EBS offers different volume types to cater to different performance requirements and use cases:

- General Purpose SSD (gp3): gp3 volumes let you provision performance independently of storage capacity, at up to 20% lower price per GB than gp2. You can scale IOPS (input/output operations per second) and throughput without provisioning additional block storage capacity, so you pay only for the storage you need. Every gp3 volume delivers a predictable baseline of 3,000 IOPS and 125 MiB/s, regardless of volume size.

- General Purpose SSD (gp2): The gp2 volume type balances price and performance for a variety of workloads. Its baseline performance scales with size at 3 IOPS per GiB (with a minimum of 100 and a maximum of 16,000 IOPS), and volumes smaller than 1,000 GiB can burst to 3,000 IOPS for short periods. This makes it suitable for most applications that require consistent performance at a reasonable cost.

- Provisioned IOPS SSD (io1): This volume type is designed for workloads that require predictable and consistent I/O performance. It allows you to provision a specific number of IOPS and provides low-latency, high-performance storage. This is particularly useful for latency-sensitive applications, such as databases and I/O-intensive workloads.

- Throughput Optimized HDD (st1): This volume type is optimized for large, sequential I/O workloads that require high throughput at a low cost. It provides cost-effective storage for big data processing, log processing, and data warehouses.

- Cold HDD (sc1): The Cold HDD volume type is designed for infrequently accessed workloads with large data sets. It offers the lowest cost per GB among EBS volume types but with lower throughput and higher latency compared to other types. It is suitable for use cases such as data backups, long-term archives, and disaster recovery.

- Magnetic (standard): The Magnetic volume type is the original, previous-generation EBS volume type. It suits small workloads with light I/O where cost matters more than performance, but AWS recommends newer volume types for new workloads, and Cold HDD (sc1) now offers a lower cost per GB.
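The difference between gp2 and gp3 baselines is easy to see with a little arithmetic. This sketch encodes the gp2 rule described above (3 IOPS per GiB, clamped to a range of 100 to 16,000 IOPS, with bursting to 3,000 IOPS for smaller volumes) and compares it with the fixed 3,000 IOPS gp3 baseline. The limits are AWS figures as we understand them; check the EBS documentation before relying on them.

```python
GP2_IOPS_PER_GIB = 3
GP2_MIN_IOPS = 100
GP2_MAX_IOPS = 16_000
GP2_BURST_IOPS = 3_000
GP3_BASELINE_IOPS = 3_000  # independent of volume size


def gp2_baseline_iops(size_gib: int) -> int:
    """gp2 baseline: 3 IOPS per GiB, clamped to the 100..16,000 range."""
    return min(max(GP2_MIN_IOPS, GP2_IOPS_PER_GIB * size_gib), GP2_MAX_IOPS)


def gp2_can_burst(size_gib: int) -> bool:
    """Only volumes whose baseline sits below 3,000 IOPS benefit from bursting."""
    return gp2_baseline_iops(size_gib) < GP2_BURST_IOPS


if __name__ == "__main__":
    for size in (20, 100, 500, 1_000, 2_000, 6_000):
        print(f"{size:>5} GiB  gp2 baseline={gp2_baseline_iops(size):>6}  "
              f"burst={gp2_can_burst(size)!s:<5}  gp3 baseline={GP3_BASELINE_IOPS}")
```

For volumes under 1,000 GiB, gp3 gives a higher sustained baseline than gp2 without relying on burst credits, in addition to its lower price per GB.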

You can size each EBS volume to match the storage capacity your workload needs. EBS volumes also support snapshots, point-in-time backups stored in Amazon S3, which you can use for data backup, replication, and disaster recovery.

EBS volumes are highly reliable and automatically replicated within their Availability Zone to protect against hardware failures. You can also resize them, or detach a volume from one EC2 instance and attach it to another in the same Availability Zone, giving you flexibility as your storage needs evolve.
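A common pattern combining these features is to snapshot a volume before changing it. The sketch below assumes boto3's EC2 client and a placeholder volume ID; the helper name is hypothetical. It returns the two calls in order: `create_snapshot`, then `modify_volume` to grow the volume and convert it to gp3.

```python
from typing import List, Tuple


def plan_volume_change(volume_id: str, current_size_gib: int, new_size_gib: int,
                       new_type: str = "gp3") -> List[Tuple[str, dict]]:
    """Return (EC2 client method name, kwargs) pairs: snapshot first, then modify."""
    if new_size_gib < current_size_gib:
        raise ValueError("EBS volumes can be enlarged in place but not shrunk")
    return [
        ("create_snapshot", {
            "VolumeId": volume_id,
            "Description": f"backup of {volume_id} before resize",
        }),
        ("modify_volume", {
            "VolumeId": volume_id,
            "Size": new_size_gib,
            "VolumeType": new_type,
        }),
    ]


if __name__ == "__main__":
    # "vol-0123456789abcdef0" is a placeholder volume ID.
    for method, kwargs in plan_volume_change("vol-0123456789abcdef0", 100, 200):
        print(method, kwargs)
        # With boto3: getattr(boto3.client("ec2"), method)(**kwargs)
```

After the volume grows, you still need to extend the partition and file system inside the operating system before the new space is usable.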

By offering a variety of volume types with different performance characteristics, Amazon EBS enables you to choose the storage option that best matches your application’s requirements, balancing performance, cost, and durability.


Amazon Elastic File System (EFS)

Amazon Elastic File System (EFS) is a fully managed, scalable, and elastic file storage service that provides shared file storage for EC2 instances. It is designed to be highly available and durable, allowing multiple EC2 instances to access the same file system simultaneously. EFS supports the Network File System (NFS) protocol, making it compatible with a wide range of Linux-based applications and workloads.

EFS offers the following throughput modes (AWS has since added a third, Elastic Throughput, which scales automatically with your workload’s activity):

- Bursting Throughput: In the bursting throughput mode, the file system provides baseline throughput levels with the ability to burst to higher throughput levels for short periods. This mode is suitable for workloads with unpredictable or sporadic access patterns that require occasional bursts of higher throughput.

- Provisioned Throughput: In the provisioned throughput mode, you can specify the desired throughput capacity for your file system. This mode is ideal for workloads that require a predictable and sustained level of throughput, ensuring consistent performance.
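When you create a file system with boto3's EFS client, the throughput mode is a parameter of `create_file_system`. This sketch (the function name and creation token are our own) validates the two modes described above and sets `ProvisionedThroughputInMibps` only in provisioned mode. The API also accepts an `elastic` mode, which this example deliberately leaves out.

```python
from typing import Optional

THROUGHPUT_MODES = {"bursting", "provisioned"}  # the two modes described above


def efs_create_kwargs(creation_token: str, throughput_mode: str = "bursting",
                      provisioned_mibps: Optional[float] = None) -> dict:
    """Keyword arguments for boto3's EFS client create_file_system()."""
    if throughput_mode not in THROUGHPUT_MODES:
        raise ValueError(f"unsupported throughput mode: {throughput_mode!r}")
    kwargs = {
        "CreationToken": creation_token,  # idempotency token for the request
        "PerformanceMode": "generalPurpose",
        "ThroughputMode": throughput_mode,
        "Encrypted": True,
    }
    if throughput_mode == "provisioned":
        if provisioned_mibps is None or provisioned_mibps <= 0:
            raise ValueError("provisioned mode needs a positive throughput in MiB/s")
        kwargs["ProvisionedThroughputInMibps"] = float(provisioned_mibps)
    elif provisioned_mibps is not None:
        raise ValueError("a throughput value only applies in provisioned mode")
    return kwargs


if __name__ == "__main__":
    print(efs_create_kwargs("example-shared-fs", "provisioned", 128))
    # With boto3: boto3.client("efs").create_file_system(**kwargs)
```

After creating the file system, you would add a mount target in each Availability Zone your instances use (for example with `create_mount_target`) before mounting it over NFS.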

Amazon EFS automatically scales its storage capacity and throughput as the data within the file system grows, making it highly scalable and eliminating the need for manual capacity management. It also offers automatic file system backups, data redundancy across multiple Availability Zones, and high durability.

EFS provides the following storage classes:

- Standard: The Standard storage class offers a balance of price, performance, and throughput. It provides low-latency access to files and is suitable for a wide range of workloads and applications.

- Infrequent Access (IA): The Infrequent Access storage class is designed for files that are accessed less frequently. It offers lower storage costs than the Standard class but charges for each read or write of the data. This class suits data that is infrequently accessed but must be readily available when required.

- One Zone: The One Zone storage classes (One Zone and One Zone-IA) are similar to Standard and IA but store data in a single Availability Zone instead of replicating it across multiple zones. This reduces costs but leaves the data exposed to an outage or loss of that Availability Zone.
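Files move from Standard to IA through EFS lifecycle management. The sketch below assumes boto3's EFS `put_lifecycle_configuration` call, and the helper name is hypothetical. It builds a policy list that moves files to IA after a set number of days and back to Standard on their next access, allowing only a subset of the day values the API accepts.

```python
ALLOWED_IA_DAYS = (7, 14, 30, 60, 90)  # a subset of the values the API accepts


def efs_lifecycle_policies(days_to_ia: int = 30, back_on_access: bool = True) -> list:
    """LifecyclePolicies list for put_lifecycle_configuration().

    Each policy object carries a single transition.
    """
    if days_to_ia not in ALLOWED_IA_DAYS:
        raise ValueError(f"days_to_ia must be one of {ALLOWED_IA_DAYS}")
    policies = [{"TransitionToIA": f"AFTER_{days_to_ia}_DAYS"}]
    if back_on_access:
        # Move a file back to Standard the first time it is read again.
        policies.append({"TransitionToPrimaryStorageClass": "AFTER_1_ACCESS"})
    return policies


if __name__ == "__main__":
    print(efs_lifecycle_policies())
    # With boto3 (placeholder file system ID):
    # boto3.client("efs").put_lifecycle_configuration(
    #     FileSystemId="fs-0123456789abcdef0", LifecyclePolicies=efs_lifecycle_policies())
```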

EFS integrates well with other AWS services, such as Amazon EC2, AWS Identity and Access Management (IAM), and AWS CloudTrail, providing seamless integration into your AWS infrastructure. You can access it through multiple EC2 instances across different Availability Zones within a region, making it suitable for scenarios that require shared access to files and high levels of throughput.

With its elastic scalability, high availability, and compatibility with a wide range of applications, Amazon EFS is an excellent choice for shared file storage needs in cloud-based environments. It simplifies file sharing across multiple instances and supports a variety of workloads, including content management systems, web serving, data analytics, and container storage.


Amazon FSx

Amazon FSx is a fully managed AWS file storage service that makes it easy to launch and run file systems. This article covers two of its file system types: Amazon FSx for Windows File Server and Amazon FSx for Lustre. Both are designed to provide high performance, scalability, and compatibility with existing applications and workflows.

- Amazon FSx for Windows File Server: Amazon FSx for Windows File Server provides fully managed, native Windows file systems that are accessible from Windows-based EC2 instances. It supports the SMB (Server Message Block) protocol and provides a Windows file system experience, making it suitable for Windows applications that require shared file storage.

Amazon FSx for Windows File Server offers two storage options:

- SSD (Solid State Drive): The SSD storage option provides low-latency and high-performance file storage for Windows workloads. It is optimized for I/O-intensive applications and provides sub-millisecond latencies.

- HDD (Hard Disk Drive): The HDD storage option provides cost-effective file storage for Windows workloads with lower performance requirements. It offers high throughput and is suitable for sequential read and write workloads.

- Amazon FSx for Lustre: Amazon FSx for Lustre is a high-performance file system designed for compute-intensive workloads that require fast access to shared file storage. It is particularly well-suited for HPC (High-Performance Computing), machine learning, and data analytics applications.

FSx for Lustre offers the following performance options:

- SSD: The SSD storage option provides high-performance, low-latency file storage for workloads that require fast data access. It is suitable for I/O-intensive workloads and provides consistent performance at scale.

- HDD: The HDD storage option provides cost-effective file storage for workloads with large datasets and high throughput requirements. It offers good performance for sequential workloads. Moreover, AWS has optimized it for applications that require high throughput rather than low latency.

Amazon FSx handles the underlying infrastructure, including data redundancy, maintenance, and backups, providing a managed and reliable file storage service. It integrates seamlessly with other AWS services, such as Amazon S3, Amazon EC2, and AWS Directory Service, making it easy to incorporate FSx into existing workflows and environments.
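To illustrate the S3 integration, here is a minimal sketch of the arguments for boto3's FSx `create_file_system` call that creates a scratch FSx for Lustre file system linked to an S3 bucket, so that the bucket's objects appear as files. The subnet ID, bucket name, and helper name are placeholders, and the capacity rule in the comment reflects our understanding of SCRATCH_2 sizing; verify it against the FSx for Lustre documentation.

```python
def lustre_scratch_kwargs(subnet_id: str, import_bucket: str, capacity_gib: int = 1200) -> dict:
    """Keyword arguments for boto3's FSx client create_file_system() (SCRATCH_2 Lustre)."""
    # Assumed sizing rule: SCRATCH_2 capacity is 1,200 GiB or a multiple of 2,400 GiB.
    if capacity_gib != 1200 and (capacity_gib <= 0 or capacity_gib % 2400 != 0):
        raise ValueError("capacity must be 1200 GiB or a positive multiple of 2400 GiB")
    return {
        "FileSystemType": "LUSTRE",
        "StorageCapacity": capacity_gib,
        "StorageType": "SSD",
        "SubnetIds": [subnet_id],
        "LustreConfiguration": {
            "DeploymentType": "SCRATCH_2",             # temporary, non-replicated storage
            "ImportPath": f"s3://{import_bucket}",   # bucket whose objects appear as files
        },
    }


if __name__ == "__main__":
    print(lustre_scratch_kwargs("subnet-0123456789abcdef0", "example-training-data"))
    # With boto3: boto3.client("fsx").create_file_system(**kwargs)
```

A scratch deployment suits short-lived processing jobs; for data that must survive beyond the job, a persistent deployment type is the safer choice.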

By offering both Windows-based and Lustre-based file systems with different performance options, Amazon FSx enables you to choose the most appropriate file storage solution for your specific workload requirements. Whether you need a native Windows file system or a high-performance file system for compute-intensive applications, FSx simplifies the deployment and management of file storage, allowing you to focus on your core business objectives.


Amazon Glacier

Amazon Glacier (now Amazon S3 Glacier) is a storage service designed for long-term data archiving and backup. It provides secure, durable, and extremely low-cost storage for data that you must retain for a long time but access infrequently. Glacier is ideal for businesses that need to store large amounts of data for compliance, regulatory, or archival purposes.

Amazon Glacier offers two storage classes:

- Archive: The Archive storage class (S3 Glacier Flexible Retrieval) is a cost-effective option for long-term retention of data that you rarely access and that can tolerate longer retrieval times. Depending on the retrieval tier you choose, retrievals take from minutes (Expedited) to several hours (Standard and Bulk).

- Deep Archive: AWS has designed the Deep Archive storage class (S3 Glacier Deep Archive) for data that is accessed very rarely and can tolerate even longer retrieval times. It offers the lowest storage costs among all AWS storage services, making it ideal for data that you must retain for the longest periods. Retrievals typically complete within 12 hours (Standard) or 48 hours (Bulk).
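Archived objects are not read directly: you first request a temporary restored copy and choose a retrieval tier, which determines how long the restore takes. The sketch below assumes you manage archives through the S3 API with boto3 and uses placeholder names. It builds the `RestoreRequest` argument for `restore_object` and rejects the Expedited tier for Deep Archive, which does not offer it.

```python
# Retrieval tiers that S3 accepts when restoring from each archive class.
# (Expedited retrieval is not offered for Deep Archive.)
RESTORE_TIERS = {
    "GLACIER": {"Expedited", "Standard", "Bulk"},  # minutes to hours
    "DEEP_ARCHIVE": {"Standard", "Bulk"},          # within 12 or 48 hours
}


def restore_request(storage_class: str, days: int, tier: str = "Standard") -> dict:
    """Build the RestoreRequest argument for boto3's S3 client restore_object().

    `days` is how long the temporary restored copy stays available.
    """
    if days < 1:
        raise ValueError("days must be at least 1")
    tiers = RESTORE_TIERS.get(storage_class)
    if tiers is None:
        raise ValueError(f"{storage_class!r} is not an archive storage class")
    if tier not in tiers:
        raise ValueError(f"tier {tier!r} is not available for {storage_class}")
    return {"Days": days, "GlacierJobParameters": {"Tier": tier}}


if __name__ == "__main__":
    req = restore_request("DEEP_ARCHIVE", days=7, tier="Bulk")
    print(req)  # {'Days': 7, 'GlacierJobParameters': {'Tier': 'Bulk'}}
    # With boto3 (placeholder bucket and key):
    # boto3.client("s3").restore_object(
    #     Bucket="example-bucket", Key="archive/2019.tar", RestoreRequest=req)
```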

Amazon Glacier provides secure storage by automatically encrypting data at rest using AES-256 encryption. It also offers a range of security features, including access control policies, retrieval limits, and Vault Lock for write-once-read-many (WORM) compliance.

To manage data in Glacier, AWS provides the Amazon S3 Glacier API and the AWS Management Console. These tools allow you to create and manage vaults, store and retrieve archives, and set data retrieval policies.

Glacier integrates with other AWS services for seamless data lifecycle management. For example, you can use Amazon S3 lifecycle policies to automatically transition objects from S3 to Glacier after a specified period, which simplifies archiving and reduces storage costs.
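A lifecycle rule of that kind might look like the following sketch, which assumes boto3's `put_bucket_lifecycle_configuration` and a hypothetical `logs/` prefix. It tiers objects down through Standard-IA and Glacier to Deep Archive. The day thresholds are illustrative, and S3 applies its own validation when you submit the rule.

```python
def archive_lifecycle(prefix: str, ia_days: int = 30, glacier_days: int = 90,
                      deep_archive_days: int = 365) -> dict:
    """LifecycleConfiguration for boto3's put_bucket_lifecycle_configuration().

    Objects under `prefix` move to Standard-IA, then Glacier Flexible Retrieval,
    then Glacier Deep Archive, counted in days since object creation.
    """
    # Objects must be at least 30 days old before moving to Standard-IA, and each
    # later transition must come after the one before it.
    if not 30 <= ia_days < glacier_days < deep_archive_days:
        raise ValueError("expected 30 <= ia_days < glacier_days < deep_archive_days")
    return {
        "Rules": [
            {
                "ID": "archive-" + (prefix.strip("/") or "all"),
                "Filter": {"Prefix": prefix},
                "Status": "Enabled",
                "Transitions": [
                    {"Days": ia_days, "StorageClass": "STANDARD_IA"},
                    {"Days": glacier_days, "StorageClass": "GLACIER"},
                    {"Days": deep_archive_days, "StorageClass": "DEEP_ARCHIVE"},
                ],
            }
        ]
    }


if __name__ == "__main__":
    print(archive_lifecycle("logs/"))
    # With boto3 (placeholder bucket):
    # boto3.client("s3").put_bucket_lifecycle_configuration(
    #     Bucket="example-bucket", LifecycleConfiguration=archive_lifecycle("logs/"))
```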

By leveraging the low-cost, highly durable, and secure storage of Amazon Glacier, businesses can efficiently store and protect large volumes of data for long-term archival purposes. It is particularly valuable for organizations that have regulatory compliance requirements or need to store data for extended periods while keeping costs at a minimum.


AWS Snowball

AWS Snowball is a data transfer service that allows you to physically move large amounts of data to and from the AWS cloud using rugged, secure, and portable devices called Snowball devices. Snowball addresses the challenges of transferring large data sets over the network, particularly when you have limited internet bandwidth or data transfer times are too long.
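A quick back-of-the-envelope calculation shows when shipping devices beats the network. The sketch below estimates how many days a transfer takes over a given link, assuming you can dedicate a fixed fraction of the bandwidth to it, and how many devices a data set would fill at the 80 TB per-device figure used in this article. The 80% utilization default and the 500 TB example are assumptions for illustration.

```python
import math

SECONDS_PER_DAY = 86_400


def network_transfer_days(data_bytes: float, link_bps: float, utilization: float = 0.8) -> float:
    """Days needed to push data_bytes over a link of link_bps bits per second.

    `utilization` is the assumed fraction of the link you can dedicate to the transfer.
    """
    if not 0 < utilization <= 1:
        raise ValueError("utilization must be in (0, 1]")
    seconds = data_bytes * 8 / (link_bps * utilization)
    return seconds / SECONDS_PER_DAY


def devices_needed(data_bytes: float, device_capacity_bytes: float) -> int:
    """Number of devices required, rounding up to whole devices."""
    return math.ceil(data_bytes / device_capacity_bytes)


if __name__ == "__main__":
    data = 500e12  # 500 TB (decimal terabytes)
    print(f"{network_transfer_days(data, 1e9):.1f} days over 1 Gbps")  # 57.9 days over 1 Gbps
    print(devices_needed(data, 80e12), "devices at 80 TB each")       # 7 devices at 80 TB each
```

Under these assumptions, 500 TB takes roughly two months over a dedicated 1 Gbps link, while the same data fits on seven 80 TB devices.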

The AWS Snowball family consists of the following devices:

- Snowball Edge: Snowball Edge is a data transfer and edge computing device that combines data transfer capabilities with compute and storage capabilities. It allows you to process and analyze data on the device itself before transferring it to AWS. Snowball Edge is useful in situations where there is a need for local data processing or offline data migration.

- Snowball: The original Snowball device is a secure, rugged, and portable storage appliance designed to transfer large amounts of data. Each Snowball device has a storage capacity of up to 80 TB (terabytes). You can request one or more Snowball devices from AWS, which will be shipped to your location. You can then load your data onto the device and ship it back to AWS, where the data will be securely transferred to your desired AWS storage service.

- Snowball Edge Storage Optimized: The Snowball Edge Storage Optimized device provides high-capacity storage for data transfer and edge computing. It offers a storage capacity of up to 80 TB, with all the data transfer and computing capabilities of Snowball Edge.

- Snowmobile: Snowmobile is an exabyte-scale data transfer solution: a secure shipping container that can transport up to 100 PB (petabytes) of data. AWS delivers the Snowmobile to your location, you load your data onto it, and AWS then securely transfers the data into the AWS cloud. AWS designed Snowmobile for extremely large data sets, where it reduces the time needed to move massive amounts of data.

Snowball devices are tamper-resistant, water-resistant, and equipped with built-in encryption to ensure the security and integrity of your data during transit. You can seamlessly integrate them with AWS services and manage them using the AWS Management Console.

The use of Snowball devices significantly reduces the time and costs associated with transferring large amounts of data. It is particularly beneficial for organizations with limited internet bandwidth or strict compliance requirements that prevent direct data transfer over the network. With Snowball, you can securely and efficiently migrate data to AWS or retrieve data from AWS, regardless of the size of your data sets.


AWS Storage Gateway

AWS Storage Gateway is a hybrid cloud storage service that enables seamless integration between on-premises environments and AWS cloud storage. It provides a bridge between your on-premises infrastructure and various AWS storage services, allowing you to securely store and retrieve data in the cloud while maintaining local access to your data.

AWS Storage Gateway offers the following types of gateways to suit different use cases:

- File Gateway: The File Gateway provides a file interface that lets you store and access objects in Amazon S3 buckets as files through NFS (Network File System) or SMB (Server Message Block) shares. It integrates seamlessly with existing on-premises file-based applications, providing a scalable and cost-effective file storage solution. File Gateway supports features like file compression, deduplication, and encryption. It also caches frequently accessed files locally for low-latency access.

- Volume Gateway:

- Stored Volumes: The Stored Volumes gateway presents a block storage interface (iSCSI volumes) to your on-premises applications. It keeps your entire dataset locally on the gateway while asynchronously backing up point-in-time snapshots of it to Amazon S3 as EBS (Elastic Block Store) snapshots for durability. This gateway type is ideal when you need low-latency access to your entire dataset.

- Cached Volumes: The Cached Volumes gateway stores data primarily in Amazon S3 while retaining frequently accessed data locally. It provides seamless integration with Amazon S3, allowing you to scale your storage capacity to petabytes without provisioning additional on-premises storage hardware. This gateway type is suitable for workloads where cost-effective storage and the ability to scale seamlessly are essential.

- Tape Gateway:

- Virtual Tape Library (VTL): The Virtual Tape Library gateway emulates a tape library and presents virtual tapes to your existing backup applications as iSCSI (Internet Small Computer System Interface) devices. It allows you to replace physical tape libraries with scalable and cost-effective virtual tapes stored in Amazon S3 or Glacier. This gateway type simplifies the transition from on-premises tape-based backups to cloud-based storage.

- Gateway-VTL: Gateway-VTL is the earlier name for Tape Gateway rather than a separate gateway type, and it does not connect to physical tape hardware. Like the Virtual Tape Library described above, it lets your existing tape backup software write to virtual tapes over iSCSI and archive them to Amazon S3 or Glacier for long-term retention.
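To make the File Gateway concrete, the sketch below builds the arguments for boto3's Storage Gateway `create_nfs_file_share` call, which exposes an S3 bucket as an NFS share restricted to a list of client CIDR blocks. The helper name, ARNs, bucket, and CIDR range are placeholders, and the set of storage classes reflects our understanding of the values the API accepts.

```python
import uuid
from typing import Iterable

# Storage classes an S3 File Gateway share can assign to new objects (assumed set).
FILE_SHARE_STORAGE_CLASSES = {
    "S3_STANDARD", "S3_INTELLIGENT_TIERING", "S3_STANDARD_IA", "S3_ONEZONE_IA",
}


def nfs_file_share_kwargs(gateway_arn: str, role_arn: str, bucket: str,
                          allowed_cidrs: Iterable[str],
                          storage_class: str = "S3_STANDARD") -> dict:
    """Keyword arguments for boto3's Storage Gateway create_nfs_file_share()."""
    if storage_class not in FILE_SHARE_STORAGE_CLASSES:
        raise ValueError(f"unsupported storage class: {storage_class!r}")
    clients = list(allowed_cidrs)
    if not clients:
        raise ValueError("restrict the share to at least one client CIDR block")
    return {
        "ClientToken": str(uuid.uuid4()),         # idempotency token for the request
        "GatewayARN": gateway_arn,
        "Role": role_arn,                         # IAM role the gateway uses to reach S3
        "LocationARN": f"arn:aws:s3:::{bucket}",  # bucket that backs the share
        "DefaultStorageClass": storage_class,
        "ClientList": clients,                    # NFS clients allowed to mount
    }


if __name__ == "__main__":
    kwargs = nfs_file_share_kwargs(
        "arn:aws:storagegateway:us-east-1:111122223333:gateway/sgw-EXAMPLE",
        "arn:aws:iam::111122223333:role/example-gateway-role",
        "example-file-share-bucket",
        ["10.0.0.0/16"],
    )
    print(kwargs["LocationARN"])
    # With boto3: boto3.client("storagegateway").create_nfs_file_share(**kwargs)
```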

AWS Storage Gateway is easy to deploy and manage using the AWS Management Console. It offers features like data compression, encryption, and bandwidth management to optimize data transfer and ensure data security. The gateway also provides local caching capabilities to improve performance and reduce latency.

By using AWS Storage Gateway, organizations can extend the benefits of cloud storage to their on-premises environments, enabling hybrid cloud architectures and leveraging the scalability and durability of AWS storage services. It simplifies hybrid data management, backup, and disaster recovery, allowing businesses to seamlessly integrate their existing infrastructure with the cloud.

How to Choose the Right Storage Type

When choosing the appropriate storage type, weigh your workload’s requirements against the characteristics of each AWS storage option.

Amazon Simple Storage Service (S3)

- Ideal for scalable object storage.

- Suitable for storing and retrieving large amounts of data easily.

EBS

- General Purpose SSD (gp3 or gp2) for a balance of price and performance.

- Throughput Optimized HDD (st1) for sequential workloads.

- Choose based on block-level storage requirements for EC2 instances.

EFS

- Provides shared file storage with high availability.

- Offers different throughput modes like bursting and provisioned.

Amazon Glacier

- Cost-effective option for long-term data archiving.

- Deep Archive storage class for rarely accessed data.

AWS Snowball

- Physically move large data sets securely and efficiently.

- Suitable for situations with limited internet bandwidth or long transfer times.

AWS Storage Gateway

- Acts as a bridge between on-premises environments and AWS cloud storage.

- File Gateway for seamless integration with file-based applications.

- Volume Gateway (Stored and Cached) for block storage needs.

- Tape Gateway (a virtual tape library, formerly called Gateway-VTL) for tape-based backups and archiving.

Amazon FSx

- FSx for Windows File Server for fully managed, native Windows file shares accessed over the SMB protocol, including seamless migration of existing Windows file shares to the cloud.

- Built-in backup and restore capabilities for data protection, plus centralized storage for Windows home directories.

- FSx for Lustre for compute-intensive workloads, such as HPC, machine learning, and data analytics, that need fast shared file storage.

Consider the specific requirements of your workload, such as data access patterns, performance needs, scalability, and durability, to choose the most suitable AWS storage type.
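As a rough summary of the guidance above, the following hypothetical helper maps a few workload traits to the service this article suggests. It is a deliberate simplification: real decisions also weigh cost, performance, durability, and access patterns, which no short rule set captures.

```python
from dataclasses import dataclass


@dataclass
class Workload:
    """Hypothetical, simplified description of a workload's storage needs."""
    interface: str = "object"       # "object", "block", or "file"
    windows: bool = False           # needs native Windows (SMB) file shares
    hpc: bool = False               # compute-intensive, needs fast shared file storage
    archive: bool = False           # rarely accessed, long-term retention
    on_premises: bool = False       # on-premises apps need access to cloud storage
    offline_transfer: bool = False  # bulk move where the network is too slow


def suggest_service(w: Workload) -> str:
    """Map a workload to the AWS service this article recommends for it."""
    if w.offline_transfer:
        return "AWS Snowball"
    if w.on_premises:
        return "AWS Storage Gateway"
    if w.archive:
        return "Amazon S3 Glacier"
    if w.interface == "object":
        return "Amazon S3"
    if w.interface == "block":
        return "Amazon EBS"
    if w.interface == "file":
        if w.windows:
            return "Amazon FSx for Windows File Server"
        if w.hpc:
            return "Amazon FSx for Lustre"
        return "Amazon EFS"
    raise ValueError(f"unknown interface: {w.interface!r}")


if __name__ == "__main__":
    print(suggest_service(Workload(interface="file", hpc=True)))  # Amazon FSx for Lustre
    print(suggest_service(Workload(interface="block")))           # Amazon EBS
```

The checks run from the most specific need to the most general, so a workload that is both on-premises and file-based lands on Storage Gateway rather than EFS.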

Wrap Up

To sum it up, understanding the different AWS storage options is crucial for selecting the appropriate storage solution for your workload. AWS offers a range of services to meet your needs, whether you require scalable object storage, block-level storage, shared file storage, or long-term data archiving. Additionally, AWS provides efficient data transfer and seamless integration between on-premises and cloud storage.

By considering factors such as data access patterns, performance requirements, scalability, and cost-effectiveness, you can make an informed decision about which storage option to choose. AWS’s comprehensive suite of storage services lets you optimize data management, enhance performance, ensure data durability, and simplify storage operations.

Selecting the right AWS storage option will enable you to efficiently store, manage, and access your data, empowering your business to leverage the full potential of the cloud.

Still need help choosing the right AWS storage type? Drop us a line at [email protected] to book a free consultation session with our DevOps & Cloud team. Xavor is a proud AWS partner, and our team will understand your unique business needs and advise you on which AWS storage option to choose.