Computer vision is undoubtedly one of the most exciting and powerful artificial intelligence (AI) technologies. Many of us interact with computer vision without even knowing it. As a technology, computer vision, also known as machine vision, is fast gaining popularity.

So, what is it? And why is it gaining so much traction these days?

This article gives a comprehensive overview of what computer vision is, how it works, and some of its use cases.

Let’s dive in!

What is Computer Vision?

Computer vision is a field of artificial intelligence that trains and enables machines to make sense of the world using visual inputs such as images and videos. It typically uses deep learning algorithms to identify and classify objects based on visual information, and it enables computers to take action or react based on what they see.

As a branch of AI, computer vision is modeled on human vision, mimicking how humans “see” objects and react to them. However, human sight still surpasses AI-based computer vision in its ability to put sensory input into context: humans can tell how far away an object is, whether it is stationary or moving, and how it differs from other objects.

But that does not mean computer vision is inherently limited or incapable of matching, and even surpassing, human vision. Computer vision aims to replicate the functions of human sight, such as judging how far away an object is. And once a machine has been trained to spot production defects, for example, the AI-powered system can inspect hundreds of products in a short time.

Thus, under the right circumstances, computer vision can exceed the abilities of human vision. No wonder, then, that sectors ranging from automobile manufacturing to hospitality are using computer vision to improve business outcomes.

Machine vision is not a new phenomenon. Its roots go back to the late 1950s and early ’60s, when neurophysiologists showed a cat a series of images while recording the responses of neurons in its brain. They discovered that visual processing begins with detecting hard edges and simple shapes.

Computer vision has grown rapidly since the 1990s, when the internet took off. The first real-time facial recognition applications emerged in the early 2000s, and progress has continued ever since.

The History of Computer Vision

Computer vision began in 1959, around the time the first image-scanning technology was developed, allowing computers to digitize images. In the 1960s, AI emerged as an academic field of study.

Building on these advances, a breakthrough came in 1963, when computers were able to transform two-dimensional images into three-dimensional forms. However, computers at that time could perceive only simple objects and struggled with more complex ones.

The next big milestone came in 1974 with the introduction of optical character recognition (OCR) technology, which could recognize text printed in virtually any font or typeface. OCR was soon followed by intelligent character recognition (ICR), which could decipher handwritten text using neural networks.

In 1982, neuroscientist David Marr reinvigorated computational neuroscience by establishing that vision works hierarchically: the visual system first extracts simple features such as edges and corners, then builds them up into progressively richer representations of a scene. This idea would fundamentally shape how computer vision works. Marr also introduced algorithms that allowed machines to detect edges, corners, curves, and other basic shapes.

Around the same time, computer scientist Kunihiko Fukushima developed the Neocognitron, a network of cells that could recognize patterns and that included convolutional layers.

The focus shifted to object recognition and real-time face recognition during the 2000s, and the 2010s became the era of deep learning. Researchers assembled ImageNet, a dataset of millions of labeled images, and used it to train deep learning models. Because these models learned features directly from the data rather than relying on hand-engineered feature extraction, ImageNet provided a foundation for contemporary convolutional neural networks (CNNs) and deep learning models.

Today, CNNs are a standard architecture for computer vision. With them, computer vision applications can use deeper, more complex networks that reach near human-level accuracy on some tasks.

How Does Computer Vision Work?

Computer vision requires vast volumes of data to work. It analyzes the data over and over until it can tell different visual inputs apart and recognize them.

Let’s take an example. Suppose you want to use machine vision to determine a dog’s breed. You would need to feed the computer many images of dogs of each breed, covering all the coat colors a breed can have, so it can identify the breed correctly. That is a tedious task! So, how do engineers achieve this?

Machine vision engineers use two primary technologies: deep learning (a subset of machine learning) and convolutional neural networks (CNNs).

Deep learning uses algorithms that allow a computer to teach itself to analyze the context of visual data. Given enough data, the computer uses algorithmic models to “see” the data and learns to identify and distinguish visual inputs. Thus, you don’t need to program a computer explicitly to recognize a picture; it learns to do so on its own.
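To make the idea of a computer teaching itself more concrete, here is a minimal Python sketch. It uses a single-layer perceptron, which is far simpler than the deep networks described here, but it shows the same core loop: the model guesses on labeled examples, measures its errors, and adjusts its weights over repeated passes. The tiny 2x2 “images”, their labels, and the function names are made up purely for illustration.

```python
# Minimal sketch: a perceptron learns to tell two kinds of tiny 2x2 images apart
# from labeled examples, without any hand-written rule. Toy data is hypothetical.

def predict(weights, bias, pixels):
    """Return 1 if the weighted sum of pixels is positive, else 0."""
    score = sum(w * p for w, p in zip(weights, pixels)) + bias
    return 1 if score > 0 else 0

def train_perceptron(examples, epochs=20, lr=0.1):
    """Learn weights from (pixels, label) pairs, where labels are 0 or 1."""
    weights = [0.0] * len(examples[0][0])
    bias = 0.0
    for _ in range(epochs):                      # repeated passes over the data
        for pixels, label in examples:
            error = label - predict(weights, bias, pixels)  # 0 when correct
            # Nudge each weight in the direction that reduces the error.
            weights = [w + lr * error * p for w, p in zip(weights, pixels)]
            bias += lr * error
    return weights, bias

# Hypothetical 2x2 grayscale images, flattened row by row (1.0 = bright).
# Label 1: top row brighter than bottom row. Label 0: the opposite.
training_data = [
    ([1.0, 1.0, 0.0, 0.0], 1),
    ([0.9, 0.8, 0.1, 0.2], 1),
    ([0.0, 0.0, 1.0, 1.0], 0),
    ([0.2, 0.1, 0.9, 0.7], 0),
]

weights, bias = train_perceptron(training_data)
print(predict(weights, bias, [0.8, 0.9, 0.2, 0.1]))  # unseen image: 1
print(predict(weights, bias, [0.1, 0.2, 0.8, 0.9]))  # unseen image: 0
```

Nobody told the model “compare the top row with the bottom row”; it arrived at weights that do so by correcting its own mistakes on the examples.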

A convolutional neural network (CNN) helps a deep learning model “see” by breaking an image down into pixels. During training, each image comes with a label (tag) describing what it shows. The network slides small filters over the pixels, performing convolutions (a mathematical operation that combines two functions to produce a third), and uses the results to predict what it is “seeing.” It then checks its predictions against the labels and adjusts its filters, repeating this over many iterations until its predictions become accurate.
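The convolution step can be illustrated with a short Python sketch. It slides a 3x3 filter over a toy grayscale image and records how strongly each patch matches the filter. The image values and the hand-picked, Sobel-style vertical-edge filter are assumptions for illustration; in a real CNN the filter values are learned during training, and the operation runs over many filters and layers.

```python
# Minimal sketch of a CNN's convolution step on a toy grayscale image.
# Like most deep learning libraries, this computes cross-correlation
# (the kernel is not flipped) and keeps only "valid" positions.

def convolve2d(image, kernel):
    """Slide `kernel` over `image` (both 2D lists) and return the response map."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for i in range(out_h):
        row = []
        for j in range(out_w):
            # Multiply the patch under the kernel element-wise and sum it up.
            total = 0
            for di in range(kh):
                for dj in range(kw):
                    total += image[i + di][j + dj] * kernel[di][dj]
            row.append(total)
        output.append(row)
    return output

# Hypothetical 5x6 image: dark on the left (0), bright on the right (9).
image = [[0, 0, 0, 9, 9, 9] for _ in range(5)]

# Hand-picked vertical-edge filter (Sobel-style); a CNN would learn its own.
kernel = [
    [-1, 0, 1],
    [-2, 0, 2],
    [-1, 0, 1],
]

for row in convolve2d(image, kernel):
    print(row)  # each row prints [0, 36, 36, 0]
```

The response is zero over flat regions and large only where dark pixels meet bright ones, which echoes the early finding that visual processing begins with detecting hard edges.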

CNNs are typically used to understand single images, whereas recurrent neural networks (RNNs) are used in a similar way for video, helping computers understand how the images in a series of frames relate to one another.

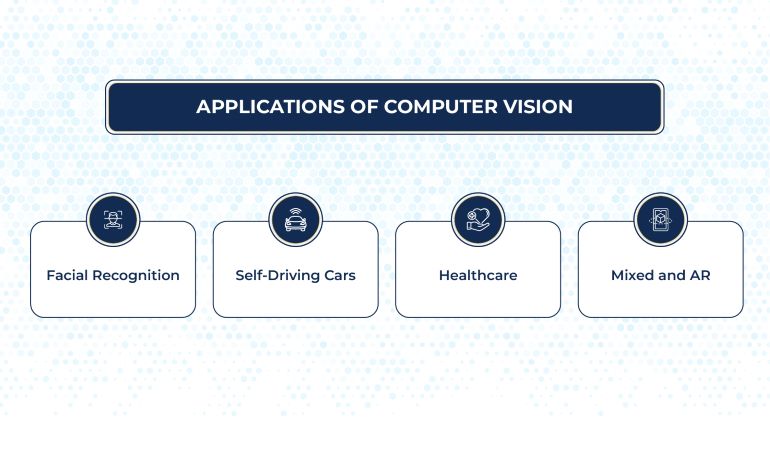

Computer Vision Applications

Enormous sums are spent researching how computer vision can help various industries improve their products or business processes. However, research is only one aspect of it. The true power of machine vision is demonstrated by its real-world applications, from healthcare and crime detection to automotive manufacturing.

A critical factor that can drive the spread of computer vision applications is the vast volume of visual data available today. This data comes from many sources, such as CCTV and other security cameras, smartphones, traffic cameras, and other camera-equipped devices. However, little of this data is currently used to improve deep learning models for computer vision.

Nevertheless, we have some excellent examples of industries and products where machine vision plays a vital role.

Let’s look at these.

-

Facial Recognition

Computer vision plays a crucial role in facial recognition software. Smartphones and other devices use facial recognition to identify people: machine vision algorithms detect facial patterns and compare them against a database of stored facial profiles.

Facial recognition, although subject to criticism, is used in many products and for various purposes. Your smartphone uses it to identify you, social media networks use it to identify users, and security agencies use it to identify criminals.
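The matching step can be sketched in Python under a common assumption: a trained model (not shown here) has already converted each face into a short list of numbers, often called an embedding. The sketch compares a new face against stored profiles using cosine similarity and accepts the closest match only if it clears a threshold. The vectors, names, and the 0.9 threshold are hypothetical; real systems use much longer vectors produced by a neural network.

```python
import math

# Hypothetical database: person name -> stored face embedding (made-up values).
FACE_DATABASE = {
    "alice": [0.9, 0.1, 0.3],
    "bob": [0.1, 0.8, 0.5],
}

def cosine_similarity(a, b):
    """Similarity between two vectors; 1.0 means they point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)

def identify(face_vector, database, threshold=0.9):
    """Return the best-matching name, or None if no profile is similar enough."""
    best_name, best_score = None, threshold
    for name, stored_vector in database.items():
        score = cosine_similarity(face_vector, stored_vector)
        if score >= best_score:
            best_name, best_score = name, score
    return best_name

print(identify([0.85, 0.15, 0.28], FACE_DATABASE))  # alice
print(identify([0.2, 0.2, 0.9], FACE_DATABASE))     # None (no close match)
```

The threshold is the design lever here: raising it reduces false matches at the cost of rejecting more genuine ones.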

-

Self-driving Cars

Machine vision is a critical component of self-driving cars, enabling them to sense their surroundings. Cameras mounted on these cars capture images and videos from various angles and feed them into computer vision software, which processes this visual input to identify road edges, read traffic signs and signals, and detect pedestrians and other objects.

Thus, self-driving cars use computer vision to avoid obstacles such as other cars and pedestrians, helping to prevent accidents and get passengers safely to their destination.

-

Healthcare

AI and its associated technologies are rapidly growing, disrupting many industries worldwide. AI in healthcare is transforming the sector, with automation streamlining tasks and machine vision enhancing medical outcomes.

A simple example of computer vision in healthcare is detecting cancerous skin lesions in images of the skin, or spotting anomalies in X-rays and MRI scans. Leading technology firms are also using machine vision to build devices that can look after elderly and sick people. These devices or robots monitor them, detect changes in their health, and suggest appropriate measures.

-

Mixed and Augmented Reality

Computer vision is vital to mixed and augmented reality (AR) technologies. These technologies use machine vision to identify objects in real time and determine their positions on a display screen. Similarly, AR tools use machine vision to estimate the depth and dimensions of real-world objects.

The Challenges of Computer Vision

Despite the significant strides computer vision has made since its early days, it still doesn’t match human vision. That is because it’s a complex field with many challenges.

-

Complexity of the Visual World

Mimicking human vision is extremely difficult. The world around us is complex and intricate: we see objects from various angles and in different lighting conditions, so the number of possible scenes is practically endless.

Building a machine that can process all possible visual scenarios is daunting.

-

Hardware Limitations

Computer vision algorithms require significant computing power. They need fast processing and an architecture that provides quick memory access, which in turn requires hardware capable of meeting those needs.

More work is still needed to overcome these hardware limitations.
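A rough back-of-the-envelope calculation shows why the computational demands are so high. A standard 2D convolutional layer performs about H_out × W_out × C_out × K × K × C_in multiply-accumulate operations (MACs). The layer sizes below (a 224x224 output, 64 filters of size 3x3, RGB input) and the 30 frames per second are assumptions chosen for illustration, and the count ignores bias terms and everything else in the network.

```python
# Rough cost estimate for one standard 2D convolutional layer (illustrative sizes).

def conv_layer_macs(out_height, out_width, out_channels, kernel_size, in_channels):
    """Multiply-accumulate operations for one 2D conv layer (no bias, no grouping)."""
    # Each output value needs kernel_size * kernel_size * in_channels multiply-adds.
    per_output = kernel_size * kernel_size * in_channels
    return out_height * out_width * out_channels * per_output

# Hypothetical layer: 224x224 output, 64 filters of size 3x3, 3 input channels (RGB).
macs_per_image = conv_layer_macs(224, 224, 64, 3, 3)
macs_per_second = macs_per_image * 30  # assuming 30 video frames per second

print(f"{macs_per_image:,} MACs per image")    # 86,704,128 MACs per image
print(f"{macs_per_second:,} MACs per second")  # 2,601,123,840 MACs per second
```

That is roughly 87 million operations for a single layer on a single image; real networks stack many such layers, which is why fast processors and memory access matter so much.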

-

Data Limitations

Computer vision algorithms are trained on gigantic datasets. This dependence on large amounts of data becomes a problem when data is scarce or unsuitable.

-

Training Time

Training and testing computer vision algorithms takes considerable time. Errors add to it: when a model misreads images, it must be corrected and retrained before it recognizes patterns reliably.

That’s a Wrap!

While the use of computer vision is growing by leaps and bounds, it still falls short of human vision in many ways. It is tough to build machines that mimic human behavior, vision, and cognition, especially when we still struggle to understand ourselves and how our own bodily functions operate.

Nevertheless, machine vision is set to play a monumental role in the future, particularly in the healthcare and automobile sectors. Companies are pouring money into determining how this technology can help them achieve their strategic goals.

Are you also wondering how AI and computer vision can help your organization? If so, contact us; we deliver innovative AI solutions that help our clients achieve their goals.